User Testing Is Shaping DOL’s Retirement Savings Lost and Found Site

“The fundamental purpose of any job-based retirement plan is to pay promised benefits to the workers who participate in those plans.”

- Assistant Secretary for Employee Benefits Security, Lisa M. Gomez

The Employee Benefits Security Administration (EBSA) is part of the U.S. Department of Labor (DOL). Most Americans likely haven’t heard of EBSA and aren’t aware of its enforcement work, such as its role in recovering more than $7 billion in retirement benefits for U.S. citizens who were ‘missing’ plan participants and for their beneficiaries.

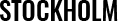

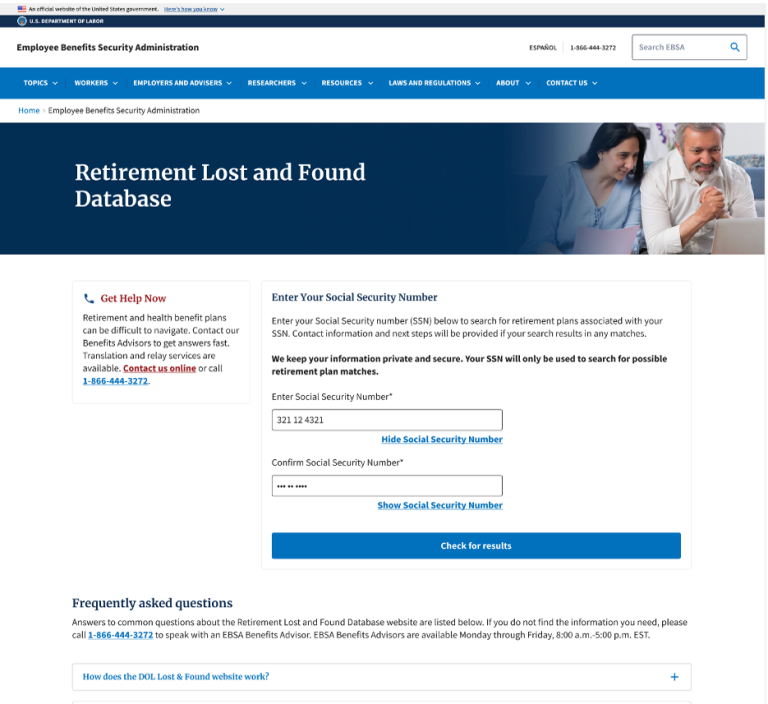

Our WebFirst CX team has conducted multiple rounds of usability research and testing for DOL’s newly launched ‘Retirement Savings Lost and Found’ database. This online portal responds to a SECURE 2.0 provision that required DOL to create a service that helps workers find missing retirement accounts. Ultimately, the Retirement Savings Lost and Found database will help America’s workers locate retirement savings they earned at past jobs.

Usability and ‘Experience’ Testing

User research and usability testing come in more than one flavor. When ‘testing’ the performance of a digital service in particular, researchers investigate multiple touchpoints, and uncovering users’ perceptions of and expectations for the service is critical.

In the case of the benefits recovery service, the end product came from the SECURE 2.0 provisions: a solution proposed with little investigation into the underlying problem. On the surface, users could be expected to appreciate its value, but the American public had never framed lost retirement savings as a problem.

Adding to the barriers to ‘entry,’ it was unclear how users would navigate the required security measures, especially within an experience they were unfamiliar with. Could they understand the value? Would the potential value be worth the extra security steps?

User Research Touchpoint 1

Our first study set out to answer those questions. We structured it as a series of four small focus groups, each with three participants who met predefined recruiting criteria.

The study structure encouraged open dialog between participants so that qualitative feedback could surface through natural conversation. To prompt that conversation, our moderator’s questions probed for emotional responses. We asked empathy-based questions with sincere curiosity, such as:

- “How do you feel about using this search to look for potential retirement plans?”

- “Anything excite you about this service?”

- “Can you explain what about this website makes you feel it’s trustworthy and want to use it?”

- “What, if anything, about this website makes you feel doubtful or not want to use it?”

“I need more information to feel safe. Many scams exist with email, text, and mail.”

“Setting up a Login.gov account may be too complicated on a mobile device.”

Our first round of research showed that participants appreciated the site’s purpose and were receptive to increased levels of security for identity verification. When asked, “On a scale of one to ten: how would you rate your experience on this website overall? (One being very poor and ten being very good),” 83% of participants responded positively. The majority felt the site “looks and feels” like a government website, which increased their confidence about entering their Social Security numbers.

Based on participants’ responses, we made the following recommendations for improving the portal’s credibility and building trust:

- Make the Login.gov integration more seamless so users feel they are still connected to the current site

- Provide additional links/supplemental information for users to learn more about EBSA and include an additional FAQ section for more detail on the site’s origin

Other recommendations addressed participants’ common remarks about the oversized marquee/hero image and their apprehension about a search returning unsuccessful or no results.

User Research Touchpoint 2

The second user research round focused on validating the updated user experience, with special attention to whether participants’ earlier legitimacy concerns had been addressed. We shifted the study structure for this round and conducted fifteen 1:1 moderated sessions. Our CX Lead and Researcher moderated online video sessions with each participant and asked each to perform a series of tasks.

The task-based study approach ‘tested’ the new experience across mobile and desktop delivery. We tested the portal design’s ability to successfully scaffold the service end-to-end, including a new, integrated Login.gov step. Throughout each session, our CX Lead encouraged the ‘talk out loud’ approach as participants engaged with the user interface, and he observed the effectiveness of the portal’s instructional text.

As participants encountered the steps that integrated Login.gov identity verification, we observed and noted users’ acceptance of the security identification steps. Unlike the first user research round, this study split the users’ search results between ‘happy’ paths (results found) and ‘unhappy’ paths (no results found).

Findings from the second round of user research demonstrated improved user satisfaction with the more tightly integrated Login.gov steps and validated that the updated design met users’ expectations for establishing trust and credibility. The majority of participants highlighted the ease of use, their familiarity with the Login.gov process, and excitement about the potential to find funds of their own.

“I know doing the Login.gov step is tedious, but it makes me feel safe.”

“I already have a Login.gov account, so that’s easy. This (Login.gov) tells me it’s safe.”

Future Plans and Monitoring

The study approach also uncovered feedback related to the ‘unhappy’ path. Specifically, users voiced uncertainty about next steps and asked for more informative instructions. They requested that the content indicate a timeline for when to “check back” or try again, rather than leaving next steps open-ended. This feedback about the “No results” outcome gave our team a data point to monitor and potentially act on after the site launched.

The portal site launched on December 25, 2024 and is equipped with site analytics that our team relies on to identify opportunities for design optimization. Our CX Lead monitors the site analytics from a funnel perspective, initially on a weekly basis and then tapering to monthly. As the portal gains more data and servicing capabilities, we anticipate an increase in its traffic. Our primary concern is monitoring for drop-offs and responding to any patterns that indicate user pain points across the process.
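Funnel monitoring of this kind generally comes down to comparing how many users reach each step with how many reached the step before it. The Python sketch below is a minimal illustration under stated assumptions, not our team’s actual analytics setup: the step names, the `Step`, `drop_off_report`, and `flag_drop_offs` names, the 30% alert threshold, and the user counts are all hypothetical and do not reflect real portal data.

```python
from dataclasses import dataclass
from typing import List, Tuple


@dataclass
class Step:
    """One hypothetical funnel step and the unique users who reached it."""
    name: str
    users: int


def drop_off_report(steps: List[Step]) -> List[Tuple[str, str, float]]:
    """Return (from_step, to_step, drop_off_rate) for each consecutive pair of steps."""
    report = []
    for prev, curr in zip(steps, steps[1:]):
        # Share of users who reached `prev` but not `curr`; guard against empty steps.
        rate = 0.0 if prev.users == 0 else 1 - curr.users / prev.users
        report.append((prev.name, curr.name, rate))
    return report


def flag_drop_offs(steps: List[Step], threshold: float = 0.3) -> List[Tuple[str, str, float]]:
    """Keep only transitions whose drop-off rate meets the (hypothetical) alert threshold."""
    return [row for row in drop_off_report(steps) if row[2] >= threshold]


if __name__ == "__main__":
    # Hypothetical step names and counts, for illustration only.
    funnel = [
        Step("Landing page", 1000),
        Step("Start Login.gov", 600),
        Step("Identity verified", 450),
        Step("Search submitted", 420),
    ]
    for src, dst, rate in drop_off_report(funnel):
        print(f"{src} -> {dst}: {rate:.0%} drop-off")
    print("Flagged:", flag_drop_offs(funnel))
```

Comparing each step only with the one before it, rather than with the landing page, makes it easier to see which specific step (for example, the Login.gov hand-off) is losing users.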

Interested in User Research?

Our CX team at WebFirst has several UX research staff who are trained in research methodologies and human-centered design tenets. We know there’s no one-size-fits-all approach to gathering user feedback or to involving your users in the design of your products and services. Our experience structuring productive research engagements spans behavioral psychology, usability testing, performance testing, A/B optimization tests, focus groups, moderated groups, 1:1 user interviewing, and ethnographic and desk research. Regardless of study goals, approach, and structure, our team’s goal is to gather real user data and generate actionable, data-driven insights.